Simulation Continuous Deployment

Several months ago, I got a new roommate. His TV was much bigger than mine, so there was no argument over putting his 70-inch 4K TV in the living room, replacing my puny 32-inch 720p TV. I retired my tiny TV to collect dust on a shelf in the back of my closet.

But it gnawed at me that it was just sitting there doing nothing. Surely I could find some use for it! After a while, I started thinking that it might be cool to mount it in our dining area and hook a Raspberry Pi up to it to display something. But what? Smart mirrors sure are cool these days. But the thing that kept coming back to me was this YouTube series. Some guy got the crazy idea to play Minecraft for years, walking from his spawn point to the literal edge of the Minecraft world and exploring the procedurally generated terrain along the way. Wouldn’t it be great to automate that process?

Not long before that, I informally participated in Ludum Dare 40. As usual, I focused way too hard on building up infrastructure and basically forgot to build a game. Instead, I had a nifty little build system marrying Docker and GNU make to compile an anemic OpenGL application. It wasn’t much to look at. It certainly wasn’t as interesting as Minecraft. But it was better than nothing. And hey, displaying it on the TV might give me some motivation to work on the project a little more!

I thought about the idea a little more deeply. It wouldn’t be as simple as just plugging in a Pi via HDMI and watching it go. My game compiles for x86, not ARM, and cross-compilation wasn’t a rabbit hole I particularly wanted to go down. Even if I did, my tiny game uses per-pixel lighting, and I figured there was no way I’d achieve a reasonable frame rate on a Pi. (What is the state of hardware-accelerated 3D graphics on the Raspberry Pi, anyway?)

So I decided rendering would have to be done on some other system. I had a few older x86 desktops lying around that I figured should fit the bill. I even had them set up headless, hanging off a switch, from a while back when I played around with a home Kubernetes cluster. At this point, I had two options: plug a conspicuous desktop directly into the TV via HDMI, or pump pixels over Wi-Fi to the tiny, concealable Pi. How hard could it be to render to a networked video stream anyway?

At this point, the basic data flow made sense. I run the application on my headless desktop, render to a stream, and pump packets to the Pi, which constantly runs a video client (maybe VLC?). But you and I are serious about continuous integration and continuous delivery. Knowing human psychology, would I really be diligent enough to update the version of the application running on that desktop every time I made a change? Not a chance.

So, without consulting XKCD’s “Is It Worth The Time” chart, I set off down the path toward continuously delivering a 3D simulation application to a TV in my apartment.

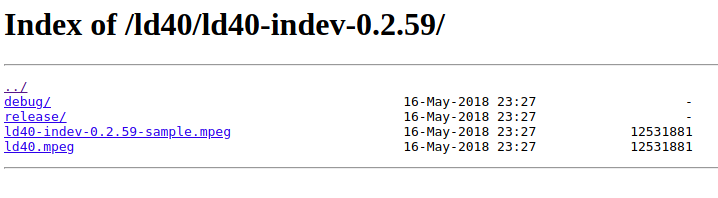

My workflow was very simple. Whenever I pushed to the master branch of my simulation’s repo, I wanted to trigger an automated build of the application, run the test suite, publish artifacts to a central store, and finally, update the desktop application (which decide to call the staging environment) with the new version. Magically, the pi would be receiving a video feed with the new version.

So I drew a picture that looked something like this.

Not a terribly complicated beast.

A lot of the services in the picture have counterparts available in the public cloud: I could use GitHub, Travis CI, Docker Hub, and Conan. But I also wasn’t sure whether I was writing a game that I would eventually go on to sell, so I decided to use private infrastructure. If I ever choose to open source it, I can always make that move then. You can’t go in the other direction.

I decided on a few subgoals:

- render uncompressed pixels to a file

- render on a headless machine

- render compressed pixels to a file

- stream video to any networked client

- stream video to the TV-mounted Raspberry Pi

- hook the whole thing into CI

At first, I wondered if I was being too granular by writing down such a detailed list. I thought this whole thing would be really easy. In hindsight, I’m glad that I did. A lot of these tasks took more research and slog than I would have imagined.

At this point, my tiny little game was pretty unimpressive. It was basically a few wandering cube creatures on a one-voxel-thick chunk. I hadn’t put a lot of thought into coloring.

I certainly hadn’t thought about a non-interactive mode. When the game started up, it put you at an immensely unsatisfying angle. I’d forgive you if you thought this game was actually 2D.

This thing did not start out in a pretty state. Dark colors. Boring geometry. Simple agent behavior. But that’s okay. My goal with this whole project was to set up a CD pipeline as an incubator for something much larger. Making the game/simulation impressive will come with time.

So the first change I needed to make was to introduce some sort of non-interactive mode. My end goal was to have a camera that automatically explored a fantastical procedurally generated world. That probably means some level of AI attached to the camera itself. Not wanting to bear that burden all at once, I settled on an incredibly simple algorithm: the camera would circle the voxel chunk while facing its center.

This took me an hour at most. It’s interesting how some of the changes that make the biggest visual impact are by far the easiest, while the really hard things rarely make an impression on passersby.
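The orbit itself is just a point moving around a circle, with the camera always aimed back at the middle. Here is a minimal C++ sketch of that math. It assumes a hypothetical `Vec3` type and made-up function names, not anything from the actual project:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal vector type; the project's real math types are not
// shown in the post, so this stands in for them.
struct Vec3 {
    float x, y, z;
};

// Eye position for a camera circling `center` in the horizontal plane at
// `radius`, raised `height` units above it, after `seconds` of elapsed time
// at an angular speed of `radians_per_second`.
Vec3 orbit_position(Vec3 center, float radius, float height,
                    float radians_per_second, float seconds) {
    assert(radius > 0.0f);
    const float angle = radians_per_second * seconds;
    return {center.x + radius * std::cos(angle),
            center.y + height,
            center.z + radius * std::sin(angle)};
}

// Unit vector from the eye toward the target: the "forward" axis a look-at
// view matrix is built from, which keeps the chunk centered on screen.
Vec3 look_direction(Vec3 eye, Vec3 target) {
    const Vec3 d{target.x - eye.x, target.y - eye.y, target.z - eye.z};
    const float len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    assert(len > 0.0f);  // eye and target must not coincide
    return {d.x / len, d.y / len, d.z / len};
}
```

On each frame, the renderer would feed the eye from `orbit_position`, together with the chunk's center, into whatever look-at view matrix it already builds (for example `glm::lookAt`, if GLM happens to be in use).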

While implementing this, I added a CLI parser so I could decide on program startup whether or not the session would be interactive. This was a good decision; I added more CLI options with every new subtask.
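A hand-rolled parser is enough for a single on/off switch. The sketch below uses hypothetical `--interactive` and `--non-interactive` flags. The post doesn't say what the real options are called, and a larger project might reach for an argument-parsing library instead:

```cpp
#include <cassert>
#include <optional>
#include <string_view>

// Hypothetical option set; grows by one field per new subtask.
struct Options {
    bool interactive = true;  // default: a human is at the keyboard
};

// Returns std::nullopt on an unrecognized argument so the caller can print
// usage and exit instead of silently ignoring a typo.
std::optional<Options> parse_args(int argc, const char* const* argv) {
    assert(argc >= 1);  // argv[0] is the program name
    Options opts;
    for (int i = 1; i < argc; ++i) {
        const std::string_view arg = argv[i];
        if (arg == "--non-interactive") {
            opts.interactive = false;
        } else if (arg == "--interactive") {
            opts.interactive = true;
        } else {
            return std::nullopt;
        }
    }
    return opts;
}
```

In `main`, `auto opts = parse_args(argc, argv);` works directly because `char**` converts to `const char* const*`. An empty optional is the cue to print usage and exit.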

The next goal was to dump uncompressed pixel data from OpenGL to a file as a proof of concept. This turned out not to be too difficult. I did have to make a concession and run an X server on each node in my server farm; some research told me that with EGL, I could have done without the X server.

I’m using GLFW so I can easily port to Windows and Mac in the future. For a while, I went down the path of using GLX directly to set up OpenGL on Linux and GLFW for everything else. As I put fingers to keyboard on this, I became more and more dissatisfied with the fact that I was essentially redoing what GLFW had already done: act as an abstraction layer over OpenGL windowing systems.

I abandoned that option and instead decided to compromise. I put an X server on each node in the server farm. Now, they would all be able to render the simulation headless.
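Once a context exists, reading a frame back is a single `glReadPixels(0, 0, width, height, GL_RGB, GL_UNSIGNED_BYTE, buffer)` call. Two details are easy to miss. First, the default `GL_PACK_ALIGNMENT` of 4 pads each row unless it is set to 1 with `glPixelStorei`. Second, the first row returned is the bottom of the framebuffer.

The sketch below wraps such a buffer in a binary PPM header so the dump can be opened in an ordinary image viewer. It is an illustration, not the project's code, and PPM is an assumed choice of format:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Encodes tightly packed 8-bit RGB pixels as a binary PPM (P6) image.
// `rgb` is assumed to be laid out the way glReadPixels fills it with
// GL_RGB / GL_UNSIGNED_BYTE and GL_PACK_ALIGNMENT == 1: bottom row first.
std::string encode_ppm(const std::vector<std::uint8_t>& rgb, int width,
                       int height) {
    assert(width > 0 && height > 0);
    const std::size_t row_bytes = static_cast<std::size_t>(width) * 3;
    assert(rgb.size() == row_bytes * static_cast<std::size_t>(height));

    std::string out = "P6\n" + std::to_string(width) + " " +
                      std::to_string(height) + "\n255\n";
    out.reserve(out.size() + rgb.size());
    const char* bytes = reinterpret_cast<const char*>(rgb.data());
    // PPM stores the top row first, but the buffer starts at the bottom row,
    // so append rows from last to first.
    for (int row = height - 1; row >= 0; --row) {
        out.append(bytes + static_cast<std::size_t>(row) * row_bytes, row_bytes);
    }
    return out;
}
```

Writing the result to disk is then `std::ofstream file("frame.ppm", std::ios::binary); file << encode_ppm(pixels, width, height);`.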

Enter LibAV

Coming out of the gate, compressing the video stream was the biggest unknown. I had a vague notion that FFmpeg could be used as a C library to encode and decode video, but I had never looked at the API.

So I dove into the documentation. It’s idiomatic C code, so the variable names are basically obfuscated. This example was the first thing that I really latched onto. I roughly adapted it to do what I thought I’d need. Unfortunately, it didn’t even compile. If you look at the top, you’ll notice that copyright is from 2001. Whoops!

The API has changed a fair bit since then. They’ve introduced things like reference counts to their data structures.

After modernizing my code and GDBing my way out of a few runtime errors, I was writing compressed video to a file!

…Unfortunately, it was upside down.

Turns out, OpenGL returns pixel rows in the opposite order from the one libav expects. I vaguely remembered a post from years ago on CodeProject where someone was doing exactly what I was doing now: rendering from OpenGL to a file. He mentioned that OpenGL natively orders its rows from bottom to top. Since this operation would sit in such a tight loop, he wrote some inline assembly to put them in the order he was looking for.

No thank you! I did not want to have to write assembly to solve this problem. So I crawled through the libav documentation to see if they had a supported solution.

Not much later, I stumbled upon libavfilter, a module within libav. It allows you to construct a graph of transformations to perform on your video and audio streams. And one of them was exactly what I needed.

After a little bit more fumbling with the API, I had a properly oriented video stream.
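For comparison, reversing the row order by hand takes only a short loop. This portable C++ sketch swaps row i with row height - 1 - i in place. It is neither the inline assembly from that post nor what the project shipped:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Reverses the row order of an image in place. `stride` is the number of
// bytes per row, e.g. width * 3 for tightly packed RGB.
void flip_rows(std::vector<std::uint8_t>& pixels, std::size_t stride,
               std::size_t height) {
    assert(pixels.size() == stride * height);
    for (std::size_t i = 0; i < height / 2; ++i) {
        std::uint8_t* top = pixels.data() + i * stride;
        std::uint8_t* bottom = pixels.data() + (height - 1 - i) * stride;
        std::swap_ranges(top, top + stride, bottom);  // exchange whole rows
    }
}
```

This loop touches every byte of every frame on the CPU. libavfilter also provides a `vflip` filter that performs the same transformation inside a filter graph.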

When I wrote down “render to file” as a subgoal, I had only meant it to serve as a proxy task. I hadn’t intended to derive any value from it. But looking at the files that I generated, I couldn’t deny that they were pretty cool. So I added a step to the CI build that records a short video and posts it alongside the other artifacts for that version of the simulation.

What if we had autogenerated screenshots for every build of popular games? I would spend hours poring over the early builds of Minecraft to see how something we all know and love evolved.

Streaming the Video

The next goal was to actually stream the video to a networked client. I immediately jumped to the simplest tool I knew of: plain unicast UDP, which VLC seemed to support. I coded this portion directly against Linux’s socket API. By this point, I had already decided that all video streaming functionality would be Linux-only. I don’t want to build custom copies of libav and x264 for Mac or Windows.

More importantly, x264 is licensed under the GPL, and a libav build that links against it falls under the GPL too. So builds linked to libav are internal tools only; they’re off the table for public release unless I decide to open source the whole project.
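The sending side of plain unicast UDP needs very little code. Below is a minimal sketch against the POSIX socket API. It assumes the encoder hands over a buffer of encoded bytes and that each datagram should stay small enough to avoid IP fragmentation. The function names are hypothetical. A `max_payload` of 1316 bytes (seven 188-byte MPEG-TS packets) is a common choice when the stream is MPEG-TS, but the post doesn't say which container is used:

```cpp
#include <algorithm>
#include <arpa/inet.h>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <netinet/in.h>
#include <sys/socket.h>
#include <sys/types.h>

// Hypothetical helper: builds an IPv4 destination from a dotted-quad string
// and a host-order port. Returns false if the address does not parse.
bool make_dest(const char* ip, std::uint16_t port, sockaddr_in& out) {
    out = sockaddr_in{};
    out.sin_family = AF_INET;
    out.sin_port = htons(port);
    return inet_pton(AF_INET, ip, &out.sin_addr) == 1;
}

// Sends `len` bytes of already-encoded video to `dest` as consecutive UDP
// datagrams of at most `max_payload` bytes each. Returns how many datagrams
// were handed to the kernel, or -1 if sendto() fails. UDP gives no delivery,
// ordering, or retransmission guarantees; the receiver must cope with gaps.
long send_chunked(int fd, const sockaddr_in& dest, const std::uint8_t* data,
                  std::size_t len, std::size_t max_payload) {
    assert(fd >= 0 && max_payload > 0);
    long datagrams = 0;
    for (std::size_t offset = 0; offset < len; offset += max_payload) {
        const std::size_t chunk = std::min(max_payload, len - offset);
        const ssize_t sent =
            sendto(fd, data + offset, chunk, 0,
                   reinterpret_cast<const sockaddr*>(&dest), sizeof(dest));
        if (sent < 0) {
            return -1;  // errno describes the failure
        }
        ++datagrams;
    }
    return datagrams;
}
```

On the Pi side, VLC can listen for such a stream with a URL like `udp://@:1234`. The port number here is arbitrary.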

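The unicast UDP option worked like a charm. But along the way, I discovered another protocol: RTP. One of its design principles is application-layer framing: the application divides its data into units that make sense on their own, so the receiver can use whatever arrives even when some of it goes missing. RTP doesn’t put its audio and video streams in a container, and it typically runs over UDP rather than TCP, which would insist on retransmitting every lost byte. In certain situations, it doesn’t make sense to retransmit lost data. I wouldn’t want to resend a frame I dropped two seconds ago.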

On a pretty basic level, this idea makes sense. And it lines up with what I’ve heard about how folks implement MMOs. Those studios tend to implement their client network protocols on top of UDP rather than TCP and selectively retransmit when it makes sense in the context of the game.
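The “selectively retransmit” idea comes down to a deadline check: a resend is only useful if it can arrive before the frame is due on screen. Here is a minimal sketch of that policy. It assumes that sender and receiver share a clock and that a latency estimate is available. The function and its parameters are hypothetical and are not part of RTP or any game engine:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical policy for a single lost frame. `due_ms` is when the receiver
// will display (or skip) the frame, `now_ms` is the current time on the same
// clock, and `one_way_latency_ms` estimates how long a resend takes to land.
bool worth_retransmitting(std::int64_t due_ms, std::int64_t now_ms,
                          std::int64_t one_way_latency_ms) {
    assert(one_way_latency_ms >= 0);
    // Only resend if the fresh copy can arrive before its deadline.
    return now_ms + one_way_latency_ms < due_ms;
}
```

With 50 ms of latency, a frame due 100 ms from now is worth resending. A frame that was due two seconds ago is not.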

My interest was piqued, but I ultimately decided it wasn’t worth my time right then. I don’t have audio in the simulation yet, and my unicast UDP solution seemed to be working just fine. I read the RFC and put a story on my backlog to come back and integrate RTP later.
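For a sense of what that backlog story involves, consider RTP’s 12-byte fixed header (RFC 3550, section 5.1). It carries exactly the fields that application-layer framing relies on. The sequence number lets the receiver spot loss and reordering, and the timestamp tells it when each payload belongs on screen (for video, this clock usually runs at 90 kHz). Below is a minimal sketch of serializing that header with no padding, extension, or CSRC list. The helper name is hypothetical, and payload type 96 in the test is simply the first of the dynamic payload types:

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Serializes the RTP fixed header in network byte order:
// V=2, P=0, X=0, CC=0 | M | PT | sequence | timestamp | SSRC.
std::array<std::uint8_t, 12> rtp_header(std::uint8_t payload_type, bool marker,
                                        std::uint16_t sequence,
                                        std::uint32_t timestamp,
                                        std::uint32_t ssrc) {
    assert(payload_type < 128);  // PT is a 7-bit field
    std::array<std::uint8_t, 12> h{};
    h[0] = 0x80;  // version 2 in the top two bits; P, X and CC all zero
    h[1] = static_cast<std::uint8_t>((marker ? 0x80u : 0u) | payload_type);
    h[2] = static_cast<std::uint8_t>(sequence >> 8);
    h[3] = static_cast<std::uint8_t>(sequence & 0xFFu);
    for (int i = 0; i < 4; ++i) {
        // Most significant byte first for both 32-bit fields.
        h[4 + i] = static_cast<std::uint8_t>(timestamp >> (24 - 8 * i));
        h[8 + i] = static_cast<std::uint8_t>(ssrc >> (24 - 8 * i));
    }
    return h;
}
```

In a video stream, the sequence number increments by one per packet, and the marker bit typically flags the last packet of a frame.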

Putting It All Together

The last two steps were to mount the Pi to the TV and upgrade the staging environment on every build. Both of these tasks required persistent, resilient services. I wanted both the Pi’s video client and the staging environment to come back up after a power outage or any other reboot. I also wanted to apply immutable infrastructure principles to both; I didn’t want to rely on one-time manual configuration. What if either the Pi or my server farm kicked the bucket?

I decided to wrap both of these services up in Debian packages. As the final step in the simulation’s pipeline, I simply run:

```shell
dpkg -i ld40-staging.deb && systemctl restart simulation-staging
```

Seconds later, the video on the TV stutters and the new environment appears. I got unreasonably excited the first time I saw this whole flow work together.

I had actually left a stream running on the Pi around the clock ever since I first got video streaming working. The first night, my roommate commented that it was too bright; he’d have to shut his door every night. So I started manually shutting it off just before bed and turning it back on in the morning.

I remembered hearing about HDMI CEC. I had played with it a long time ago, but hadn’t had much success and gave up. I decided to give it another stab: if it actually worked, it would make this whole thing that much more self-sustaining.

I can’t lie. Turning off your TV over SSH is a pretty great experience. And all it takes is a simple bash one-liner:

```shell
echo "standby 0" | /usr/bin/cec-client RPI -s -d 1
```

So I set up a pair of cron jobs: one to turn off the TV at 10:30PM and another to turn the TV on at 6:00AM. It’s still working like a charm.

One-Man Agile

A while ago, I got into the habit of creating a todo.txt file at the root of my personal projects. It’s a simple checklist of things that I’d like to achieve in the project. I’ll check things off as I complete them and add more as they come to mind.

Having it all written down somewhere allows me to focus in-depth on one aspect of the project without endangering the ideas I have that are little more than vague threads at the fringes of my mind.

The todo.txt file for this particular project became massive a few weeks in. I could not possibly maintain this thing. I was already starting to accidentally add duplicate items because I couldn’t fit it all on the screen at once.

It was about this time that I started heavily using GitLab to host the project. It offers an “issue tracking” system featuring a kanban board, so I figured I would just migrate the items in my todo.txt file to the issue tracker for my simulation repo.

While doing that, I got into an agilist state of mind and decided to flesh out my features and stories. I wrote context, validation criteria, and acceptance criteria for everything. At the time, I thought it was a bit of self-indulgent overkill. I could not have predicted how drastically it would affect the way I interact with this project. It’s no exaggeration to say that this blog wouldn’t exist if I hadn’t migrated my checklist to that kanban board.

Writing acceptance criteria meant that I had to force myself through the bits that turned into a slog. This is usually where personal projects run aground: things get a bit tough, so you decide to compromise on quality or just give up entirely.

Writing validation criteria gave me context for why I was doing what I was doing and forced me to think critically about the value I was deriving from the whole project. Without sharing this project, there was little reason to continue. So I added a whole series of stories for setting up this blog.

And of course, I had to apply the same definition of done to those stories. So this blog is being continuously delivered from source control as well.

Working through these well-defined stories also helped me appreciate the relationship between the various roles in Agile. When I wrote the acceptance criteria for stories, I did so with an understanding of what constraints needed to be satisfied to derive value from the task being done. But sometimes I overstepped. My acceptance criteria weren’t general enough. And when I got down to development, I realized that I needed to break the letter of the AC to achieve the spirit of the story.

When the product owner and developer are different people, this process can be much more difficult. It’s important that both sides approach it with an understanding of the other’s perspective.

In general, the complex ceremonies that have arisen around development are about facilitating parallelization of work. There are compromises made to individual efficiency to enable many individuals to work all at once. Whenever multiple people work together, especially in an environment as information-heavy as software engineering, there is substantial overhead in communication. It’s always going to be easier to keep information in your head than to attempt to share it with others.

The Future of the Simulation

One of my stated goals with continuously deploying to the TV was to build up excitement and motivation to work on the project. The results completely exceeded my expectations. There’s something visceral about having a video feed displayed on your wall. And seeing your changes reflected there an hour later when you go to the kitchen to make a sandwich is very rewarding.

So I thought about the future of the project. A lot. I started piling more and more milestones into my backlog on GitLab. I thought through different use cases and read so many research papers that I actually decided to start a research paper community of practice at work.

Making small changes to make what was on the TV more interesting became hard to resist.

Eventually, what started as a small Ludum Dare game project had grown in my head into a distributed, horizontally scalable, always-on, procedurally generated, AI-driven simulation. This is a laughably ambitious idea. I’ll probably never get to all of that. But between the focus that validation-criteria-driven development puts on delivering incremental value and the excitement this process has built for the project, I don’t plan on tabling it anytime soon. You can expect to hear plenty more about it right here.